We deliver cutting-edge projection mapping with real-time tracking integration, enabling dynamic media to be presented on moving 3D surfaces.

Our solutions go beyond static installations and pre-rendered sequences. We unlock projection that responds in the moment, without sacrificing accuracy, resolution, colour fidelity or performance.

This ensures seamless media aligned to real-world objects, even as they move freely in space.

At the heart of this dynamic projection mapping capability is mesh mapping, a technique that wraps flat media onto complex 3D geometry (a “mesh”). Media is authored to a UV map, a flattened 2D representation of the 3D mesh. Think of it like peeling an orange, laying the peel flat, painting it, and then wrapping it back around the orange.
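As a rough illustration of the UV idea, the sketch below takes one triangle of a hypothetical mesh, picks a point on its surface, and looks up the media colour that belongs there. The names `interpolate` and `sample_texel`, the 2x2 "texture" and the nearest-neighbour lookup are all assumptions made for this example, not a description of our engine.

```python
def interpolate(a, b, c, weights):
    """Blend three per-vertex tuples using barycentric weights."""
    w0, w1, w2 = weights
    return tuple(w0 * x + w1 * y + w2 * z for x, y, z in zip(a, b, c))


def sample_texel(texture, uv):
    """Nearest-neighbour lookup; u and v are in [0, 1], v measured from the top row."""
    height, width = len(texture), len(texture[0])
    col = min(int(uv[0] * width), width - 1)
    row = min(int(uv[1] * height), height - 1)
    return texture[row][col]


# One triangle of a hypothetical mesh: 3D positions and the matching UVs.
positions = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.5)]
uvs = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]

# Flat media, reduced to a 2x2 grid of named colours for readability.
texture = [["red", "green"], ["blue", "white"]]

# The same weights locate the point on the 3D surface and in the flat media.
weights = (0.2, 0.7, 0.1)
surface_point = interpolate(*positions, weights)
uv = interpolate(*uvs, weights)
colour = sample_texel(texture, uv)
```

Because the same barycentric weights drive both lookups, the media stays attached to the surface wherever the triangle ends up in space.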

When rendered, the content conforms precisely to the object’s form, with every media pixel’s position in the projected image calculated based on surface orientation and projector perspective.

Within a spatially calibrated scene, both the projectors and the mesh are defined relative to the same 3D space, the mesh with the help of motion capture tracking technology. Our system computes how the mesh appears from each projector’s point of view, accounting for the projector’s position and rotation and for optical characteristics such as throw ratio. This ensures pixel-perfect alignment between the media and the physical object.
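To make the projector's point of view concrete, here is a minimal sketch that maps a 3D scene point to a projector pixel using an idealised pinhole model. It assumes throw ratio means throw distance divided by image width, and it ignores lens shift and distortion. The `project_point` function and the dictionary layout are hypothetical, chosen only for this example.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def project_point(point, projector):
    """Return the (x, y) pixel that lights `point`, or None if it is behind the lens."""
    offset = tuple(p - o for p, o in zip(point, projector["position"]))
    # Express the point in the projector's own right / up / forward axes.
    x = dot(offset, projector["right"])
    y = dot(offset, projector["up"])
    z = dot(offset, projector["forward"])
    if z <= 0:
        return None
    width, height = projector["resolution"]
    throw = projector["throw_ratio"]  # assumed: distance / image width
    aspect = width / height
    # At distance z the image is z / throw wide, centred on the optical axis.
    u = 0.5 + throw * x / z
    v = 0.5 - throw * aspect * y / z  # pixel rows count downwards
    return (u * width, v * height)


# Hypothetical projector at the origin, looking along +z.
projector = {
    "position": (0.0, 0.0, 0.0),
    "right": (1.0, 0.0, 0.0),
    "up": (0.0, 1.0, 0.0),
    "forward": (0.0, 0.0, 1.0),
    "resolution": (1920, 1080),
    "throw_ratio": 2.0,
}

centre_pixel = project_point((0.0, 0.0, 4.0), projector)
edge_pixel = project_point((1.0, 0.0, 4.0), projector)
```

With a throw ratio of 2.0 the image is 2 m wide at 4 m, so a point 1 m to the right of the axis lands on the right-hand edge of the raster. Returned pixels may fall outside the raster, which simply means the point is outside that projector's coverage.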

As the object moves, a motion capture or other tracking system feeds real-time positional and rotational data to the media servers. That data dynamically updates the virtual mesh’s location and orientation in the scene. The projector-to-mesh calculations are continuously refreshed to reflect the object’s new pose, on a per-frame basis.
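The per-frame update can be sketched as follows, assuming the tracking system reports each pose as a position plus a unit quaternion in (w, x, y, z) order. Real tracking systems differ in conventions and units, and `pose_mesh` and `tracked_frames` are hypothetical names used only for illustration.

```python
import math


def cross(a, b):
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def rotate(quaternion, vector):
    """Rotate a 3D vector by a unit quaternion given as (w, x, y, z)."""
    w, x, y, z = quaternion
    axis = (x, y, z)
    t = tuple(2.0 * c for c in cross(axis, vector))
    u = cross(axis, t)
    return tuple(vector[i] + w * t[i] + u[i] for i in range(3))


def pose_mesh(rest_vertices, position, quaternion):
    """Place object-space vertices in the scene for one tracked pose."""
    return [
        tuple(r + p for r, p in zip(rotate(quaternion, vertex), position))
        for vertex in rest_vertices
    ]


# Vertices of the virtual mesh in the object's own coordinate frame.
rest_vertices = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]

# Two sample poses: at rest, then moved and turned 90 degrees about z.
half = math.radians(90.0) / 2.0
tracked_frames = [
    ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0, 0.0)),
    ((0.5, 0.0, 2.0), (math.cos(half), 0.0, 0.0, math.sin(half))),
]

for position, quaternion in tracked_frames:
    world_vertices = pose_mesh(rest_vertices, position, quaternion)
    # A renderer would now re-project world_vertices for every projector.
```

Only the pose changes from frame to frame. The rest-pose vertices and their UVs are never modified.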

The UV mapping remains fixed, so the only variables are the object’s position and orientation and, consequently, which surfaces of the object are visible to each projector. Our media server engine updates frame playback and surface mapping accordingly, preserving the illusion that the media is painted onto the object, no matter how it moves.
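One simple way to decide which surfaces a projector can reach is a facing test: a triangle is a candidate only if its outward normal points towards the projector. The sketch below shows that test alone. It assumes counter-clockwise vertex order when viewed from outside, it does not handle one surface occluding another, and `faces_projector` is a hypothetical helper rather than part of our product.

```python
def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def faces_projector(triangle, projector_position):
    """True if the triangle's outward side is turned towards the projector."""
    a, b, c = triangle
    normal = cross(sub(b, a), sub(c, a))
    centroid = tuple(sum(axis) / 3.0 for axis in zip(a, b, c))
    to_projector = sub(projector_position, centroid)
    return dot(normal, to_projector) > 0.0


# A triangle in the z = 0 plane whose outward normal points along +z.
triangle = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))

front_projector = (0.0, 0.0, 5.0)
rear_projector = (0.0, 0.0, -5.0)

lit_from_front = faces_projector(triangle, front_projector)
lit_from_rear = faces_projector(triangle, rear_projector)
```

Running the test against the posed mesh each frame, once per projector, gives the set of surfaces each projector may need to cover as the object turns.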

We’re both tracking-system-agnostic and projector-agnostic. Whether working with optical motion capture, IR-based systems, LiDAR, encoder feedback from mechanical systems, or other spatial tracking technologies, our solution adapts to your preferred infrastructure.

Likewise, we work with any projector brand or model, from compact short-throws to large-format dome projectors.

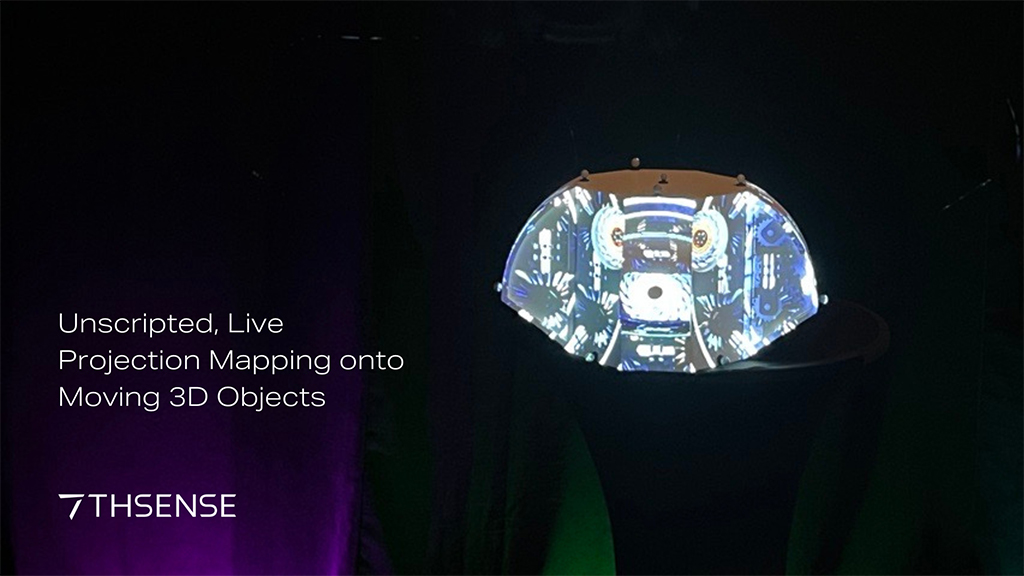

To prove what’s possible, we staged a world-first live demonstration of real-time, unscripted projection mapping on freely moving 3D objects, for audiences to see for themselves.

Using two projectors and a trio of tracked sculptural objects, we demonstrated continuous, reactive mapping without predefined motion paths. As the objects were lifted, rotated and spun, the projected media held perfect lock, even during sudden shifts in orientation.

The system processed tracking data in real time, recalculating projection alignment and rendering the next frame on the fly. There were no baked sequences, no predictive modelling, and no operator intervention. Just high-resolution, low-latency, real-time mapped media that followed the physical objects with sub-frame accuracy.

This demo showcased our platform’s power, precision, and versatility in a demanding live environment, and earned us industry recognition for pioneering projection mapping that breaks the static ‘norm’.

From motion-aware media installations to reactive stage design, 7thSense brings projection mapping to real-time, dynamic, untethered applications.